前言

学习了Inception V3卷积神经网络,总结一下对Inception V3网络结构和主要代码的理解。

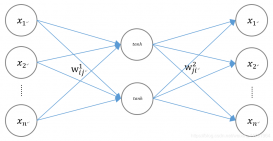

GoogLeNet对网络中的传统卷积层进行了修改,提出了被称为 Inception 的结构,用于增加网络深度和宽度,提高深度神经网络性能。从Inception V1到Inception V4有4个更新版本,每一版的网络在原来的基础上进行改进,提高网络性能。本文介绍Inception V3的网络结构和主要代码。

1 非Inception Module的普通卷积层

首先定义一个非Inception Module的普通卷积层函数inception_v3_base,输入参数inputs为图片数据的张量。第1个卷积层的输出通道数为32,卷积核尺寸为【3x3】,步长为2,padding模式是默认的VALID,第1个卷积层之后的张量尺寸变为(299-3)/2+1=149,即【149x149x32】。

后面的卷积层采用相同的形式,最后张量尺寸变为【35x35x192】。这几个普通的卷积层主要使用了3x3的小卷积核,小卷积核可以低成本的跨通道的对特征进行组合。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

|

def inception_v3_base(inputs,scepe=None): with tf.variable_scope(scope,'InceptionV3',[inputs]): with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],stride=1,padding='VALID'): # 149 x 149 x 32 net = slim.conv2d(inputs,32,[3,3],stride=2,scope='Conv2d_1a_3x3') # 147 x 147 x 32' net = slim.conv2d(net,32),[3,3],scope='Conv2d_2a_3x3') # 147 x 147 x 64 net = slim.conv2d(net,64,[3,3],padding='SAME',scope='Conv2d_2b_3x3') # 73 x 73 x 64 net = slim.max_pool2d(net, [3, 3], stride=2, scope='MaxPool_3a_3x3') # 73 x 73 x 80 net = slim.conv2d(net, 80, [1, 1], scope= 'Conv2d_3b_1x1') # 71 x 71 x 192. net = slim.conv2d(net, 192, [3, 3], scope='Conv2d_4a_3x3',reuse=tf.AUTO_REUSE) # 35 x 35 x 192 net = slim.max_pool2d(net, [3, 3], stride=2, scope= 'MaxPool_5a_3x3') |

2 三个Inception模块组

接下来是三个连续的Inception模块组,每个模块组有多个Inception module组成。

下面是第1个Inception模块组,包含了3个类似的Inception module,分别是:Mixed_5b,Mixed_5c,Mixed_5d。第1个Inception module有4个分支,

第1个分支是输出通道为64的【1x1】卷积,

第2个分支是输出通道为48的【1x1】卷积,再连接输出通道为64的【5x5】卷积,

第3个分支是输出通道为64的【1x1】卷积,再连接2个输出通道为96的【3x3】卷积,

第4个分支是【3x3】的平均池化,再连接输出通道为32的【1x1】卷积。

最后用tf.concat将4个分支的输出合并在一起,输出通道之和为54+64+96+32=256,最后输出的张量尺寸为【35x35x256】。

第2个Inception module也有4个分支,与第1个模块类似,只是最后连接输出通道数为64的【1x1】卷积,最后输出的张量尺寸为【35x35x288】。

第3个模块与第2个模块一样。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

|

with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],stride=1,padding='SAME'): # 35 x 35 x 256 end_point = 'Mixed_5b' with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net,depth(64),[1,1],scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv2d_0b_5x5') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(96), [3, 3],scope='Conv2d_0b_3x3') branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_3x3') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(32), [1, 1], scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3]) # 64+64+96+32=256 end_points[end_point] = net # 35 x 35 x 288 end_point = 'Mixed_5c' with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0b_1x1') branch_1 = slim.conv2d(branch_1, depth(64), [5, 5],scope='Conv_1_0c_5x5') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(64), [1, 1],scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(96), [3, 3],scope='Conv2d_0b_3x3') branch_2 = slim.conv2d(branch_2, depth(96), [3, 3],scope='Conv2d_0c_3x3') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3],scope='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(64), [1, 1],scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3]) end_points[end_point] = net # 35 x 35 x 288 end_point = 'Mixed_5d' with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(64), [5, 5],scope='Conv2d_0b_5x5') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(96), [3, 3],scope='Conv2d_0b_3x3') branch_2 = slim.conv2d(branch_2, depth(96), [3, 3],scope='Conv2d_0c_3x3') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(64), [1, 1],scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3]) end_points[end_point] = net |

第2个Inception模块组包含了5个Inception module,分别是Mixed_6a,Mixed_6b,Mixed_6ac,Mixed_6d,Mixed_6e。

每个Inception module包含有多个分支,第1个Inception module的步长为2,因此图片尺寸被压缩,最后输出的张量尺寸为【17x17x768】。

第2个Inception module采用了Fractorization into small convolutions思想,串联了【1x7】和【7x1】卷积,最后也是将多个通道合并。

第3、4个Inception module与第2个类似,都是用来增加卷积和非线性变化,提炼特征。张量尺寸不变,多个module后仍旧是【17x17x768】。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

|

# 17 x 17 x 768.end_point = 'Mixed_6a'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(384), [3, 3], stride=2,padding='VALID', scope='Conv2d_1a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(96), [3, 3],scope='Conv2d_0b_3x3') branch_1 = slim.conv2d(branch_1, depth(96), [3, 3], stride=2,padding='VALID', scope='Conv2d_1a_1x1') with tf.variable_scope('Branch_2'): branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID',scope='MaxPool_1a_3x3') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2]) # (35-3)/2+1=17end_points[end_point] = net# 17 x 17 x 768.end_point = 'Mixed_6b'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(128), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(128), [1, 7],scope='Conv2d_0b_1x7') branch_1 = slim.conv2d(branch_1, depth(192), [7, 1],scope='Conv2d_0c_7x1') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(128), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(128), [7, 1],scope='Conv2d_0b_7x1') branch_2 = slim.conv2d(branch_2, depth(128), [1, 7],scope='Conv2d_0c_1x7') branch_2 = slim.conv2d(branch_2, depth(128), [7, 1], scope='Conv2d_0d_7x1') branch_2 = slim.conv2d(branch_2, depth(192), [1, 7],scope='Conv2d_0e_1x7') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(192), [1, 1],scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])end_points[end_point] = netprint(net.shape)# 17 x 17 x 768.end_point = 'Mixed_6c'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): ranch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(160), [1, 7],scope='Conv2d_0b_1x7') branch_1 = slim.conv2d(branch_1, depth(192), [7, 1],scope='Conv2d_0c_7x1') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(160), [7, 1],scope='Conv2d_0b_7x1') branch_2 = slim.conv2d(branch_2, depth(160), [1, 7],scope='Conv2d_0c_1x7') branch_2 = slim.conv2d(branch_2, depth(160), [7, 1],scope='Conv2d_0d_7x1') branch_2 = slim.conv2d(branch_2, depth(192), [1, 7],scope='Conv2d_0e_1x7') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(192), [1, 1],scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])end_points[end_point] = net# 17 x 17 x 768.end_point = 'Mixed_6d'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(160), [1, 7], scope='Conv2d_0b_1x7') branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0b_7x1') branch_2 = slim.conv2d(branch_2, depth(160), [1, 7], scope='Conv2d_0c_1x7') branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0d_7x1') branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], sco e='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(192), [1, 1],scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])end_points[end_point] = net# 17 x 17 x 768.end_point = 'Mixed_6e'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(192), [1, 7], scope='Conv2d_0b_1x7') branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1') with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d(branch_2, depth(192), [7, 1], scope='Conv2d_0b_7x1') branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0c_1x7') branch_2 = slim.conv2d(branch_2, depth(192), [7, 1], scope='Conv2d_0d_7x1') branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7') with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])end_points[end_point] = net |

第3个Inception模块组包含了3个Inception module,分别是Mxied_7a,Mixed_7b,Mixed_7c。

第1个Inception module包含了3个分支,与上面的结构类似,主要也是通过改变通道数、卷积核尺寸,包括【1x1】、【3x3】、【1x7】、【7x1】来增加卷积和非线性变化,提升网络性能。

最后3个分支在输出通道上合并,输出张量的尺寸为【8 x 8 x 1280】。第3个Inception module后得到的张量尺寸为【8 x 8 x 2048】。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

|

# 8 x 8 x 1280.end_point = 'Mixed_7a'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') branch_0 = slim.conv2d(branch_0, depth(320), [3, 3], stride=2, padding='VALID', scope='Conv2d_1a_3x3') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1') branch_1 = slim.conv2d(branch_1, depth(192), [1, 7], scope='Conv2d_0b_1x7') branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1') branch_1 = slim.conv2d(branch_1, depth(192), [3, 3], stride=2, padding='VALID', scope='Conv2d_1a_3x3') with tf.variable_scope('Branch_2'): branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID', scope='MaxPool_1a_3x3') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2])end_points[end_point] = net# 8 x 8 x 2048.end_point = 'Mixed_7b'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(320), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(384), [1, 1], scope='Conv2d_0a_1x1') branch_1 = tf.concat(axis=3, values=[ slim.conv2d(branch_1, depth(384), [1, 3], scope='Conv2d_0b_1x3'), slim.conv2d(branch_1, depth(384), [3, 1], scope='Conv2d_0b_3x1')]) with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(448), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d( branch_2, depth(384), [3, 3], scope='Conv2d_0b_3x3') branch_2 = tf.concat(axis=3, values=[ slim.conv2d(branch_2, depth(384), [1, 3], scope='Conv2d_0c_1x3'), slim.conv2d(branch_2, depth(384), [3, 1], scope='Conv2d_0d_3x1')]) with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d( branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])end_points[end_point] = net)# 8 x 8 x 2048.end_point = 'Mixed_7c'with tf.variable_scope(end_point): with tf.variable_scope('Branch_0'): branch_0 = slim.conv2d(net, depth(320), [1, 1], scope='Conv2d_0a_1x1') with tf.variable_scope('Branch_1'): branch_1 = slim.conv2d(net, depth(384), [1, 1], scope='Conv2d_0a_1x1') branch_1 = tf.concat(axis=3, values=[ slim.conv2d(branch_1, depth(384), [1, 3], scope='Conv2d_0b_1x3'), slim.conv2d(branch_1, depth(384), [3, 1], scope='Conv2d_0c_3x1')]) with tf.variable_scope('Branch_2'): branch_2 = slim.conv2d(net, depth(448), [1, 1], scope='Conv2d_0a_1x1') branch_2 = slim.conv2d( branch_2, depth(384), [3, 3], scope='Conv2d_0b_3x3') branch_2 = tf.concat(axis=3, values=[ slim.conv2d(branch_2, depth(384), [1, 3], scope='Conv2d_0c_1x3'), slim.conv2d(branch_2, depth(384), [3, 1], scope='Conv2d_0d_3x1')]) with tf.variable_scope('Branch_3'): branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3') branch_3 = slim.conv2d( branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1') net = tf.concat(axis=3, values=[branch_0, branch_1, branch_2, branch_3])end_points[end_point] = net |

3 Auxiliary Logits、全局平均池化、Softmax分类

Inception V3网络的最后一部分是Auxiliary Logits、全局平均池化、Softmax分类。

首先是Auxiliary Logits,作为辅助分类的节点,对分类结果预测有很大帮助。

先通过end_points['Mixed_6e']得到Mixed_6e后的特征张量,之后接一个【5x5】的平均池化,步长为3,padding为VALID,张量尺寸从第2个模块组的【17x17x768】变为【5x5x768】。

接着连接一个输出通道为128的【1x1】卷积和输出通道为768的【5x5】卷积,输出尺寸变为【1x1x768】。

然后连接输出通道数为num_classes的【1x1】卷积,输出变为【1x1x1000】。最后将辅助分类节点的输出存储到字典表end_points中。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],stride=1,padding='SAME'): aux_logits = end_points['Mixed_6e'] print(aux_logits.shape) with tf.variable_scope('AuxLogits'): aux_logits = slim.avg_pool2d(aux_logits,[5,5],stride=3,padding='VALID',scope='AvgPool_1a_5x5') aux_logits = slim.conv2d(aux_logits,depth(128),[1,1],scope='Conv2d_1b_1x1') # (17-5)/3+1=5 kernel_size = _reduced_kernel_size_for_small_input(aux_logits, [5, 5]) aux_logits = slim.conv2d(aux_logits, depth(768), kernel_size, weights_initializer=trunc_normal(0.01), padding='VALID', scope='Conv2d_2a_{}x{}'.format(*kernel_size)) aux_logits = slim.conv2d( aux_logits, num_classes, [1, 1], activation_fn=None, normalizer_fn=None, weights_initializer=trunc_normal(0.001), scope='Conv2d_2b_1x1') aux_logits = tf.squeeze(aux_logits, [1, 2], name='SpatialSqueeze') end_points['AuxLogits'] = aux_logits |

最后对最后一个卷积层的输出Mixed_7c进行一个【8x8】的全局平均池化,padding为VALID,输出张量从【8 x 8 x 2048】变为【1 x 1 x 2048】,然后连接一个Dropout层,接着连接一个输出通道数为1000的【1x1】卷积。

使用tf.squeeze去掉输出张量中维数为1的维度。最后用Softmax得到最终分类结果。返回分类结果logits和包含各个卷积后的特征图字典表end_points。

|

1

2

3

4

5

6

7

8

9

10

11

|

with tf.variable_scope('Logits'): kernel_size = _reduced_kernel_size_for_small_input(net, [8, 8]) net = slim.avg_pool2d(net, kernel_size, padding='VALID',scope='AvgPool_1a_{}x{}'.format(*kernel_size)) end_points['AvgPool_1a'] = net net = slim.dropout(net, keep_prob=dropout_keep_prob, scope='Dropout_1b') end_points['PreLogits'] = net logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None, normalizer_fn=None, scope='Conv2d_1c_1x1') logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze') end_points['Logits'] = logits end_points['Predictions'] = slim.softmax(logits, scope='Predictions')return logits,end_points |

参考文献:

1. 《TensorFlow实战》

以上就是卷积神经Inception V3网络结构代码解析的详细内容,更多关于Inception V3卷积神经网络的资料请关注服务器之家其它相关文章!

原文链接:https://blog.csdn.net/fxfviolet/article/details/81608022