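While building the visual SLAM code, the book mentions the ORB, SURF, and SIFT feature extraction methods, along with two feature matching methods: brute-force matching (Brute-Force Matcher) and fast approximate nearest-neighbor matching (FLANN). Section 7.9 covers the 3D-3D case, the Iterative Closest Point (ICP) method. ICP can be solved in two ways: with linear algebra (mainly SVD), or with nonlinear optimization.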
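To make the brute-force matching mentioned above concrete, here is a minimal sketch that does not use OpenCV. It assumes 256-bit binary descriptors (the size ORB typically produces) stored as four 64-bit words; the names `Descriptor`, `HammingMatch`, `hammingDistance`, and `bruteForceMatch` are hypothetical and only serve this illustration. For every query descriptor it scans all train descriptors and keeps the one with the smallest Hamming distance.

```cpp
#include <array>
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical stand-in for a 256-bit binary descriptor (4 x 64 bits).
using Descriptor = std::array<std::uint64_t, 4>;

// One match: index into the query set, index into the train set, distance.
struct HammingMatch {
    std::size_t queryIdx;
    std::size_t trainIdx;
    int distance;
};

// Hamming distance = number of differing bits between two descriptors.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    int d = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        d += static_cast<int>(std::bitset<64>(a[i] ^ b[i]).count());
    }
    return d;
}

// Brute force: compare every query descriptor with every train descriptor
// and keep the nearest one. Cost is O(|query| * |train|).
std::vector<HammingMatch> bruteForceMatch(const std::vector<Descriptor>& query,
                                          const std::vector<Descriptor>& train) {
    std::vector<HammingMatch> matches;
    if (train.empty()) {
        return matches;
    }
    for (std::size_t q = 0; q < query.size(); ++q) {
        std::size_t best = 0;
        int bestDist = std::numeric_limits<int>::max();
        for (std::size_t t = 0; t < train.size(); ++t) {
            const int d = hammingDistance(query[q], train[t]);
            if (d < bestDist) {
                bestDist = d;
                best = t;
            }
        }
        matches.push_back({q, best, bestDist});
    }
    return matches;
}
```

The quadratic cost is why an approximate method such as FLANN becomes attractive once the number of descriptors grows large.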

The complete code is as follows:

Link: https://pan.baidu.com/s/1rlh9jtg_awtuyzmphqij3q Extraction code: 8888

main.cpp


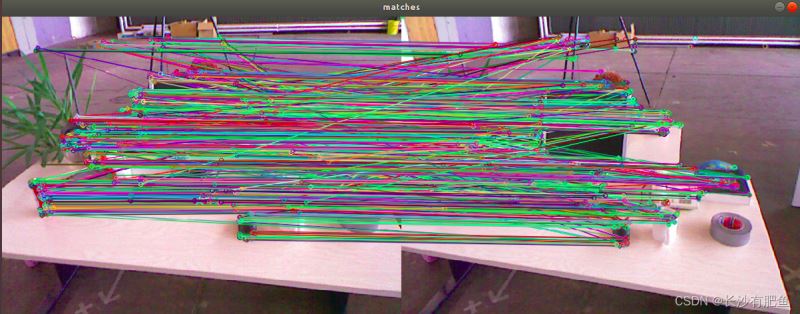

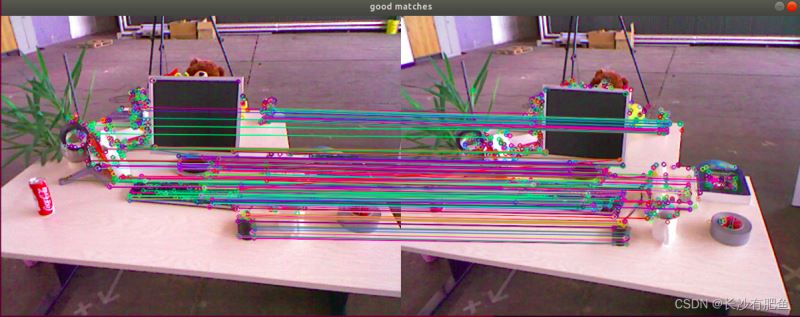

```cpp
#include <iostream>
#include "opencv2/opencv.hpp"
#include "opencv2/core/core.hpp"
#include "opencv2/features2d/features2d.hpp"
#include "opencv2/highgui/highgui.hpp"
#include <opencv2/xfeatures2d.hpp>
#include <vector>
#include <time.h>
#include <chrono>
#include <math.h>
#include <bits/stdc++.h>

using namespace std;
using namespace cv;
using namespace cv::xfeatures2d;

double picture1_size_change = 1;
double picture2_size_change = 1;

bool show_picture = true;

void extract_orb2(string picture1, string picture2)
{
    //-- Read the images
    Mat img_1 = imread(picture1, CV_LOAD_IMAGE_COLOR);
    Mat img_2 = imread(picture2, CV_LOAD_IMAGE_COLOR);
    assert(img_1.data != nullptr && img_2.data != nullptr);
    resize(img_1, img_1, Size(), picture1_size_change, picture1_size_change);
    resize(img_2, img_2, Size(), picture2_size_change, picture2_size_change);

    //-- Initialization
    std::vector<KeyPoint> keypoints_1, keypoints_2;
    Mat descriptors_1, descriptors_2;
    Ptr<FeatureDetector> detector = ORB::create(2000, (1.200000048f), 8, 100);
    Ptr<DescriptorExtractor> descriptor = ORB::create(5000);
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

    //-- Step 1: detect Oriented FAST corner positions
    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    detector->detect(img_1, keypoints_1);
    detector->detect(img_2, keypoints_2);

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute(img_1, keypoints_1, descriptors_1);
    descriptor->compute(img_2, keypoints_2, descriptors_2);
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    // cout << "extract orb cost = " << time_used.count() * 1000 << " ms " << endl;
    cout << "detect " << keypoints_1.size() << " and " << keypoints_2.size() << " keypoints " << endl;

    if (show_picture)
    {
        Mat outimg1;
        drawKeypoints(img_1, keypoints_1, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
        imshow("orb features", outimg1);
    }

    //-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance
    vector<DMatch> matches;
    // t1 = chrono::steady_clock::now();
    matcher->match(descriptors_1, descriptors_2, matches);
    t2 = chrono::steady_clock::now();
    time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "extract and match orb cost = " << time_used.count() * 1000 << " ms " << endl;

    //-- Step 4: filter the matched pairs
    // Compute the minimum and maximum distance
    auto min_max = minmax_element(matches.begin(), matches.end(),
                                  [](const DMatch &m1, const DMatch &m2) { return m1.distance < m2.distance; });
    double min_dist = min_max.first->distance;
    double max_dist = min_max.second->distance;

    // printf("-- max dist : %f \n", max_dist);
    // printf("-- min dist : %f \n", min_dist);

    // A match is considered wrong when its descriptor distance exceeds twice the minimum distance.
    // The minimum distance is sometimes very small, so an empirical value of 30 is used as a lower bound.
    std::vector<DMatch> good_matches;
    for (int i = 0; i < descriptors_1.rows; i++)
    {
        if (matches[i].distance <= max(2 * min_dist, 30.0))
        {
            good_matches.push_back(matches[i]);
        }
    }
    cout << "match " << good_matches.size() << " keypoints " << endl;

    //-- Step 5: draw the matching results
    Mat img_match;
    Mat img_goodmatch;
    drawMatches(img_1, keypoints_1, img_2, keypoints_2, matches, img_match);
    drawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, img_goodmatch);

    if (show_picture)
        imshow("good matches", img_goodmatch);
    if (show_picture)
        waitKey(0);
}

void extract_sift(string picture1, string picture2)
{
    // double t = (double)getTickCount();
    Mat temp = imread(picture1, IMREAD_GRAYSCALE);
    Mat image_check_changed = imread(picture2, IMREAD_GRAYSCALE);
    if (!temp.data || !image_check_changed.data)
    {
        printf("could not load images...\n");
        return;
    }

    resize(temp, temp, Size(), picture1_size_change, picture1_size_change);
    resize(image_check_changed, image_check_changed, Size(), picture2_size_change, picture2_size_change);

    //Mat image_check_changed = change_image(image_check);
    //("temp", temp);
    if (show_picture)
        imshow("image_check_changed", image_check_changed);

    int minhessian = 500;
    // Ptr<SURF> detector = SURF::create(minhessian); // surf
    Ptr<SIFT> detector = SIFT::create(); // sift

    vector<KeyPoint> keypoints_obj;
    vector<KeyPoint> keypoints_scene;
    Mat descriptor_obj, descriptor_scene;

    clock_t starttime, endtime;
    starttime = clock();

    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    // cout << "extract orb cost = " << time_used.count() * 1000 << " ms " << endl;
    detector->detectAndCompute(temp, Mat(), keypoints_obj, descriptor_obj);
    detector->detectAndCompute(image_check_changed, Mat(), keypoints_scene, descriptor_scene);
    cout << "detect " << keypoints_obj.size() << " and " << keypoints_scene.size() << " keypoints " << endl;

    // matching
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(descriptor_obj, descriptor_scene, matches);
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "extract and match cost = " << time_used.count() * 1000 << " ms " << endl;

    // Find the minimum and maximum distance
    double mindist = 1000;
    double maxdist = 0;
    // one descriptor per row
    for (int i = 0; i < descriptor_obj.rows; i++)
    {
        double dist = matches[i].distance;
        if (dist > maxdist)
        {
            maxdist = dist;
        }
        if (dist < mindist)
        {
            mindist = dist;
        }
    }
    // printf("max distance : %f\n", maxdist);
    // printf("min distance : %f\n", mindist);

    // find good matched points
    vector<DMatch> goodmatches;
    for (int i = 0; i < descriptor_obj.rows; i++)
    {
        double dist = matches[i].distance;
        if (dist < max(5 * mindist, 1.0))
        {
            goodmatches.push_back(matches[i]);
        }
    }
    //rectangle(temp, Point(1, 1), Point(177, 157), Scalar(0, 0, 255), 8, 0);

    cout << "match " << goodmatches.size() << " keypoints " << endl;
    endtime = clock();
    // cout << "took time : " << (double)(endtime - starttime) / CLOCKS_PER_SEC * 1000 << " ms" << endl;

    Mat matchesimg;
    drawMatches(temp, keypoints_obj, image_check_changed, keypoints_scene, goodmatches, matchesimg,
                Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    if (show_picture)
        imshow("flann matching result01", matchesimg);
    // imwrite("c:/users/administrator/desktop/matchesimg04.jpg", matchesimg);

    // Compute the homography h
    std::vector<Point2f> points1, points2;

    // Collect the corresponding points
    for (size_t i = 0; i < goodmatches.size(); i++)
    {
        // queryIdx indexes the descriptors and keypoints of the first (query) image
        points1.push_back(keypoints_obj[goodmatches[i].queryIdx].pt);
        // trainIdx indexes the descriptors and keypoints of the second (train) image
        points2.push_back(keypoints_scene[goodmatches[i].trainIdx].pt);
    }

    // Find the homography with RANSAC (random sample consensus)
    Mat h = findHomography(points1, points2, RANSAC);
    //imwrite("c:/users/administrator/desktop/c-train/c-train/result/sift/image4_surf_minhessian1000_ mindist1000_a0.9b70.jpg", matchesimg);

    vector<Point2f> obj_corners(4);
    vector<Point2f> scene_corners(4);
    obj_corners[0] = Point(0, 0);
    obj_corners[1] = Point(temp.cols, 0);
    obj_corners[2] = Point(temp.cols, temp.rows);
    obj_corners[3] = Point(0, temp.rows);

    // Perspective transform: map the object's corners into the scene image
    perspectiveTransform(obj_corners, scene_corners, h);

    //Mat dst;
    cvtColor(image_check_changed, image_check_changed, COLOR_GRAY2BGR);
    line(image_check_changed, scene_corners[0], scene_corners[1], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[1], scene_corners[2], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[2], scene_corners[3], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[3], scene_corners[0], Scalar(0, 0, 255), 2, 8, 0);

    if (show_picture)
    {
        Mat outimg1;
        Mat temp_color = imread(picture1, CV_LOAD_IMAGE_COLOR);
        drawKeypoints(temp_color, keypoints_obj, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
        imshow("sift features", outimg1);
    }

    if (show_picture)
        imshow("draw object", image_check_changed);
    // imwrite("c:/users/administrator/desktop/image04.jpg", image_check_changed);

    // t = ((double)getTickCount() - t) / getTickFrequency();
    // printf("averagetime:%f\n", t);
    if (show_picture)
        waitKey(0);
}

void extract_surf(string picture1, string picture2)
{
    // double t = (double)getTickCount();
    Mat temp = imread(picture1, IMREAD_GRAYSCALE);
    Mat image_check_changed = imread(picture2, IMREAD_GRAYSCALE);
    if (!temp.data || !image_check_changed.data)
    {
        printf("could not load images...\n");
        return;
    }

    resize(temp, temp, Size(), picture1_size_change, picture1_size_change);
    resize(image_check_changed, image_check_changed, Size(), picture2_size_change, picture2_size_change);

    //Mat image_check_changed = change_image(image_check);
    //("temp", temp);
    if (show_picture)
        imshow("image_check_changed", image_check_changed);

    int minhessian = 500;
    Ptr<SURF> detector = SURF::create(minhessian); // surf
    // Ptr<SIFT> detector = SIFT::create(minhessian); // sift

    vector<KeyPoint> keypoints_obj;
    vector<KeyPoint> keypoints_scene;
    Mat descriptor_obj, descriptor_scene;

    clock_t starttime, endtime;
    starttime = clock();

    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    // cout << "extract orb cost = " << time_used.count() * 1000 << " ms " << endl;
    detector->detectAndCompute(temp, Mat(), keypoints_obj, descriptor_obj);
    detector->detectAndCompute(image_check_changed, Mat(), keypoints_scene, descriptor_scene);
    cout << "detect " << keypoints_obj.size() << " and " << keypoints_scene.size() << " keypoints " << endl;

    // matching
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(descriptor_obj, descriptor_scene, matches);
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "extract and match cost = " << time_used.count() * 1000 << " ms " << endl;

    // Find the minimum and maximum distance
    double mindist = 1000;
    double maxdist = 0;
    // one descriptor per row
    for (int i = 0; i < descriptor_obj.rows; i++)
    {
        double dist = matches[i].distance;
        if (dist > maxdist)
        {
            maxdist = dist;
        }
        if (dist < mindist)
        {
            mindist = dist;
        }
    }
    // printf("max distance : %f\n", maxdist);
    // printf("min distance : %f\n", mindist);

    // find good matched points
    vector<DMatch> goodmatches;
    for (int i = 0; i < descriptor_obj.rows; i++)
    {
        double dist = matches[i].distance;
        if (dist < max(2 * mindist, 0.15))
        {
            goodmatches.push_back(matches[i]);
        }
    }
    //rectangle(temp, Point(1, 1), Point(177, 157), Scalar(0, 0, 255), 8, 0);

    cout << "match " << goodmatches.size() << " keypoints " << endl;
    endtime = clock();
    // cout << "took time : " << (double)(endtime - starttime) / CLOCKS_PER_SEC * 1000 << " ms" << endl;

    Mat matchesimg;
    drawMatches(temp, keypoints_obj, image_check_changed, keypoints_scene, goodmatches, matchesimg,
                Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    if (show_picture)
        imshow("flann matching result01", matchesimg);
    // imwrite("c:/users/administrator/desktop/matchesimg04.jpg", matchesimg);

    // Compute the homography h
    std::vector<Point2f> points1, points2;

    // Collect the corresponding points
    for (size_t i = 0; i < goodmatches.size(); i++)
    {
        // queryIdx indexes the descriptors and keypoints of the first (query) image
        points1.push_back(keypoints_obj[goodmatches[i].queryIdx].pt);
        // trainIdx indexes the descriptors and keypoints of the second (train) image
        points2.push_back(keypoints_scene[goodmatches[i].trainIdx].pt);
    }

    // Find the homography with RANSAC (random sample consensus)
    Mat h = findHomography(points1, points2, RANSAC);
    //imwrite("c:/users/administrator/desktop/c-train/c-train/result/sift/image4_surf_minhessian1000_ mindist1000_a0.9b70.jpg", matchesimg);

    vector<Point2f> obj_corners(4);
    vector<Point2f> scene_corners(4);
    obj_corners[0] = Point(0, 0);
    obj_corners[1] = Point(temp.cols, 0);
    obj_corners[2] = Point(temp.cols, temp.rows);
    obj_corners[3] = Point(0, temp.rows);

    // Perspective transform: map the object's corners into the scene image
    perspectiveTransform(obj_corners, scene_corners, h);

    //Mat dst;
    cvtColor(image_check_changed, image_check_changed, COLOR_GRAY2BGR);
    line(image_check_changed, scene_corners[0], scene_corners[1], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[1], scene_corners[2], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[2], scene_corners[3], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[3], scene_corners[0], Scalar(0, 0, 255), 2, 8, 0);

    if (show_picture)
    {
        Mat outimg1;
        Mat temp_color = imread(picture1, CV_LOAD_IMAGE_COLOR);
        drawKeypoints(temp_color, keypoints_obj, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
        imshow("surf features", outimg1);
    }

    if (show_picture)
        imshow("draw object", image_check_changed);
    // imwrite("c:/users/administrator/desktop/image04.jpg", image_check_changed);

    // t = ((double)getTickCount() - t) / getTickFrequency();
    // printf("averagetime:%f\n", t);
    if (show_picture)
        waitKey(0);
}

void extract_akaze(string picture1, string picture2)
{
    // Read the images
    Mat temp = imread(picture1, IMREAD_GRAYSCALE);
    Mat image_check_changed = imread(picture2, IMREAD_GRAYSCALE);
    // If either image cannot be read, print an error and return
    if (!temp.data || !image_check_changed.data)
    {
        printf("could not load images...\n");
        return;
    }
    resize(temp, temp, Size(), picture1_size_change, picture1_size_change);
    resize(image_check_changed, image_check_changed, Size(), picture2_size_change, picture2_size_change);

    //Mat image_check_changed = change_image(image_check);
    //("temp", temp);
    if (show_picture)
    {
        imshow("image_checked_changed", image_check_changed);
    }

    int minhessian = 500;
    Ptr<AKAZE> detector = AKAZE::create(); // akaze
    vector<KeyPoint> keypoints_obj;
    vector<KeyPoint> keypoints_scene;
    Mat descriptor_obj, descriptor_scene;
    clock_t starttime, endtime;
    starttime = clock();
    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    detector->detectAndCompute(temp, Mat(), keypoints_obj, descriptor_obj);
    detector->detectAndCompute(image_check_changed, Mat(), keypoints_scene, descriptor_scene);
    cout << " detect " << keypoints_obj.size() << " and " << keypoints_scene.size() << " keypoints " << endl;

    // AKAZE descriptors are binary (CV_8U) by default, while the default
    // FlannBasedMatcher index expects CV_32F data, so convert before matching.
    descriptor_obj.convertTo(descriptor_obj, CV_32F);
    descriptor_scene.convertTo(descriptor_scene, CV_32F);

    // matching
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(descriptor_obj, descriptor_scene, matches);
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "extract and match cost = " << time_used.count() * 1000 << " ms " << endl;

    // Find the minimum and maximum distance
    double mindist = 1000;
    double maxdist = 0;
    // one descriptor per row
    for (int i = 0; i < descriptor_obj.rows; i++)
    {
        double dist = matches[i].distance;
        if (dist > maxdist)
        {
            maxdist = dist;
        }
        if (dist < mindist)
        {
            mindist = dist;
        }
    }
    // printf("max distance : %f\n", maxdist);
    // printf("min distance : %f\n", mindist);

    // find good matched points
    vector<DMatch> goodmatches;
    for (int i = 0; i < descriptor_obj.rows; i++)
    {
        double dist = matches[i].distance;
        if (dist < max(5 * mindist, 1.0))
        {
            goodmatches.push_back(matches[i]);
        }
    }
    //rectangle(temp, Point(1, 1), Point(177, 157), Scalar(0, 0, 255), 8, 0);

    cout << " match " << goodmatches.size() << " keypoints " << endl;
    endtime = clock();
    // cout << "took time : " << (double)(endtime - starttime) / CLOCKS_PER_SEC * 1000 << " ms" << endl;

    Mat matchesimg;
    drawMatches(temp, keypoints_obj, image_check_changed, keypoints_scene, goodmatches, matchesimg,
                Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    if (show_picture)
        imshow("flann matching result01", matchesimg);
    // imwrite("c:/users/administrator/desktop/matchesimg04.jpg", matchesimg);

    // Compute the homography h
    std::vector<Point2f> points1, points2;

    // Collect the corresponding points
    for (size_t i = 0; i < goodmatches.size(); i++)
    {
        // queryIdx indexes the descriptors and keypoints of the first (query) image
        points1.push_back(keypoints_obj[goodmatches[i].queryIdx].pt);
        // trainIdx indexes the descriptors and keypoints of the second (train) image
        points2.push_back(keypoints_scene[goodmatches[i].trainIdx].pt);
    }

    // Find the homography with RANSAC (random sample consensus)
    Mat h = findHomography(points1, points2, RANSAC);
    //imwrite("c:/users/administrator/desktop/c-train/c-train/result/sift/image4_surf_minhessian1000_ mindist1000_a0.9b70.jpg", matchesimg);

    vector<Point2f> obj_corners(4);
    vector<Point2f> scene_corners(4);
    obj_corners[0] = Point(0, 0);
    obj_corners[1] = Point(temp.cols, 0);
    obj_corners[2] = Point(temp.cols, temp.rows);
    obj_corners[3] = Point(0, temp.rows);

    // Perspective transform: map the object's corners into the scene image
    perspectiveTransform(obj_corners, scene_corners, h);

    //Mat dst
    cvtColor(image_check_changed, image_check_changed, COLOR_GRAY2BGR);
    line(image_check_changed, scene_corners[0], scene_corners[1], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[1], scene_corners[2], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[2], scene_corners[3], Scalar(0, 0, 255), 2, 8, 0);
    line(image_check_changed, scene_corners[3], scene_corners[0], Scalar(0, 0, 255), 2, 8, 0);

    if (show_picture)
    {
        Mat outimg1;
        Mat temp_color = imread(picture1, CV_LOAD_IMAGE_COLOR);
        drawKeypoints(temp_color, keypoints_obj, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
        imshow("akaze features", outimg1);
    }

    if (show_picture)
        waitKey(0);
}

void extract_orb(string picture1, string picture2)
{
    Mat img_1 = imread(picture1);
    Mat img_2 = imread(picture2);

    // Check the images before resizing; resize() fails on an empty Mat
    if (!img_1.data || !img_2.data)
    {
        cout << "error reading images " << endl;
        return;
    }

    resize(img_1, img_1, Size(), picture1_size_change, picture1_size_change);
    resize(img_2, img_2, Size(), picture2_size_change, picture2_size_change);

    vector<Point2f> recognized;
    vector<Point2f> scene;

    recognized.resize(1000);
    scene.resize(1000);

    Mat d_srcl, d_srcr;

    Mat img_matches, des_l, des_r;
    // ORB operates on grayscale images
    cvtColor(img_1, d_srcl, COLOR_BGR2GRAY); // the CPU ORB implementation converts its input to grayscale itself, so this step can be omitted
    cvtColor(img_2, d_srcr, COLOR_BGR2GRAY);

    Ptr<ORB> d_orb = ORB::create(1500);

    Mat d_descriptorsl, d_descriptorsr, d_descriptorsl_32f, d_descriptorsr_32f;

    vector<KeyPoint> keypoints_1, keypoints_2;

    // The matcher type is set with NORM_L2 here. The integer overload expects a matcher type instead:
    // FLANNBASED = 1, BRUTEFORCE = 2, BRUTEFORCE_L1 = 3, BRUTEFORCE_HAMMING = 4, BRUTEFORCE_HAMMINGLUT = 5, BRUTEFORCE_SL2 = 6.
    // NORM_L2 has the value 4, so this call selects BRUTEFORCE_HAMMING.
    Ptr<DescriptorMatcher> d_matcher = DescriptorMatcher::create(NORM_L2);

    std::vector<DMatch> matches;      // all matches
    std::vector<DMatch> good_matches; // matches kept after filtering by descriptor distance

    clock_t starttime, endtime;
    starttime = clock();

    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    d_orb->detectAndCompute(d_srcl, Mat(), keypoints_1, d_descriptorsl);
    d_orb->detectAndCompute(d_srcr, Mat(), keypoints_2, d_descriptorsr);
    cout << "detect " << keypoints_1.size() << " and " << keypoints_2.size() << " keypoints " << endl;
    // endtime = clock();
    // cout << "took time : " << (double)(endtime - starttime) / CLOCKS_PER_SEC * 1000 << " ms" << endl;

    d_matcher->match(d_descriptorsl, d_descriptorsr, matches); // l and r denote the left and right images being matched

    // Measure the time spent on extraction and matching
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "extract and match cost = " << time_used.count() * 1000 << " ms " << endl;

    int sz = matches.size();
    double max_dist = 0;
    double min_dist = 100;
    for (int i = 0; i < sz; i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist)
            min_dist = dist;
        if (dist > max_dist)
            max_dist = dist;
    }

    for (int i = 0; i < sz; i++)
    {
        if (matches[i].distance < 0.6 * max_dist)
        {
            good_matches.push_back(matches[i]);
        }
    }

    cout << "match " << good_matches.size() << " keypoints " << endl;
    // endtime = clock();
    // cout << "took time : " << (double)(endtime - starttime) / CLOCKS_PER_SEC * 1000 << " ms" << endl;

    // Collect the good matches' points in the second image to locate the matched region roughly
    for (size_t i = 0; i < good_matches.size(); ++i)
    {
        scene.push_back(keypoints_2[good_matches[i].trainIdx].pt);
    }

    for (unsigned int j = 0; j < scene.size(); j++)
        cv::circle(img_2, scene[j], 2, cv::Scalar(0, 255, 0), 2);

    // Draw all matches
    Mat showmatches;
    drawMatches(img_1, keypoints_1, img_2, keypoints_2, matches, showmatches);
    if (show_picture)
        imshow("matches", showmatches);
    // imwrite("matches.png", showmatches);

    // Draw the good matches
    Mat showgoodmatches;
    drawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, showgoodmatches);
    if (show_picture)
        imshow("good_matches", showgoodmatches);
    // imwrite("good_matches.png", showgoodmatches);

    // Draw the good matches' points in the second image
    if (show_picture)
        imshow("matchpoints_in_img_2", img_2);
    // imwrite("matchpoints_in_img_2.png", img_2);
    if (show_picture)
        waitKey(0);
}

int main(int argc, char **argv)
{
    if (argc < 3)
    {
        cout << "usage: main picture1 picture2" << endl;
        return 1;
    }
    string picture1 = string(argv[1]);
    string picture2 = string(argv[2]);
    // string picture1 = "data/picture1/6.jpg";
    // string picture2 = "data/picture2/16.png";

    cout << "\nextract_orb::" << endl;
    extract_orb(picture1, picture2);

    cout << "\nextract_orb::" << endl;
    extract_orb2(picture1, picture2);

    cout << "\nextract_surf::" << endl;
    extract_surf(picture1, picture2);

    cout << "\nextract_akaze::" << endl;
    extract_akaze(picture1, picture2);

    cout << "\nextract_sift::" << endl;
    extract_sift(picture1, picture2);

    cout << "success!!" << endl;
}
```

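The match filtering rule used in `extract_orb2`, `extract_sift`, `extract_surf`, and `extract_akaze` (keep a match when its distance is at most `max(ratio * min_dist, floor)`) can be isolated as a small function. The sketch below is OpenCV-free so it can be compiled on its own; `ScoredMatch` and `filterByMinDistance` are hypothetical names introduced for this illustration, and the sketch uses `<=` throughout, whereas the SIFT/SURF/AKAZE functions in the listing use a strict `<`.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical stand-in for cv::DMatch: only the fields the filter needs.
struct ScoredMatch {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep matches whose distance is at most max(ratio * minimum distance, floor).
// The floor guards against a very small minimum distance rejecting nearly everything.
std::vector<ScoredMatch> filterByMinDistance(const std::vector<ScoredMatch>& matches,
                                             double ratio, double floor) {
    std::vector<ScoredMatch> good;
    if (matches.empty()) {
        return good;
    }
    const auto minIt = std::min_element(
        matches.begin(), matches.end(),
        [](const ScoredMatch& a, const ScoredMatch& b) { return a.distance < b.distance; });
    const double threshold = std::max(ratio * static_cast<double>(minIt->distance), floor);
    for (const ScoredMatch& m : matches) {
        if (m.distance <= threshold) {
            good.push_back(m);
        }
    }
    return good;
}
```

With `ratio = 2` and `floor = 30.0` this reproduces the ORB rule from the listing; the SIFT and SURF variants differ only in the two constants (`5` and `1.0`, `2` and `0.15`), which reflects the different distance scales of their descriptors.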
CMakeLists.txt


```cmake
cmake_minimum_required(VERSION 2.8.3) # minimum CMake version
project(descriptorcompare)            # project name
set(CMAKE_CXX_COMPILER "g++")         # compiler
add_compile_options(-std=c++14)       # compile option: C++ standard

# Directory where the generated executables are placed
set(EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR})

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -fpermissive -g -O3 -Wno-unused-function -Wno-return-type")

find_package(OpenCV 3.0 REQUIRED)
message(STATUS "using opencv version ${OpenCV_VERSION}")
find_package(Eigen3 3.3.8 REQUIRED)
find_package(Pangolin REQUIRED)

# Link directories
link_directories(${PROJECT_SOURCE_DIR}/lib)

# Header directories
include_directories(
    ${PROJECT_SOURCE_DIR}/include
    ${EIGEN3_INCLUDE_DIR}
    ${OpenCV_INCLUDE_DIRS}
    ${Pangolin_INCLUDE_DIRS}
)

add_library(${PROJECT_NAME} test.cc)
target_link_libraries(${PROJECT_NAME}
    ${OpenCV_LIBS}
    ${EIGEN3_LIBS}
    ${Pangolin_LIBRARIES}
)

add_executable(main main.cpp)
target_link_libraries(main ${PROJECT_NAME})

add_executable(icp icp.cpp)
target_link_libraries(icp ${PROJECT_NAME})
```

Execution result


```
./main 1.png 2.png
```


```
extract_orb::
detect 1500 and 1500 keypoints
extract and match cost = 21.5506 ms
match 903 keypoints

extract_orb::
detect 1304 and 1301 keypoints
extract and match orb cost = 25.4976 ms
match 313 keypoints

extract_surf::
detect 915 and 940 keypoints
extract and match cost = 53.8371 ms
match 255 keypoints

extract_sift::
detect 1536 and 1433 keypoints
extract and match cost = 97.9322 ms
match 213 keypoints
success!!
```

icp
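As a reminder of the linear-algebra (SVD) solution, here is a short sketch of the derivation. The symbols are chosen for this note and are assumptions: $p_i$ and $p'_i$ are the matched 3D points from the first and second image, which correspond to `pts1` and `pts2` in the code. ICP seeks the rotation $R$ and translation $t$ that minimize

$$
\min_{R,\,t}\ \frac{1}{2}\sum_{i=1}^{n}\left\| p_i - \left(R\,p'_i + t\right) \right\|_2^2 .
$$

With the centroids and the centered coordinates

$$
\bar{p} = \frac{1}{n}\sum_{i=1}^{n} p_i,\qquad
\bar{p}' = \frac{1}{n}\sum_{i=1}^{n} p'_i,\qquad
q_i = p_i - \bar{p},\qquad
q'_i = p'_i - \bar{p}',
$$

the rotation follows from the SVD of a $3\times 3$ matrix, and the translation from the centroids:

$$
W = \sum_{i=1}^{n} q_i\, {q'_i}^{\top} = U\,\Sigma\,V^{\top},\qquad
R = U\,V^{\top},\qquad
t = \bar{p} - R\,\bar{p}' .
$$

In `pose_estimation_3d3d` these steps appear as the accumulation of `w`, the `JacobiSVD` call, and the final `t_`; when $\det(R) < 0$ that function flips the sign of $R$.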


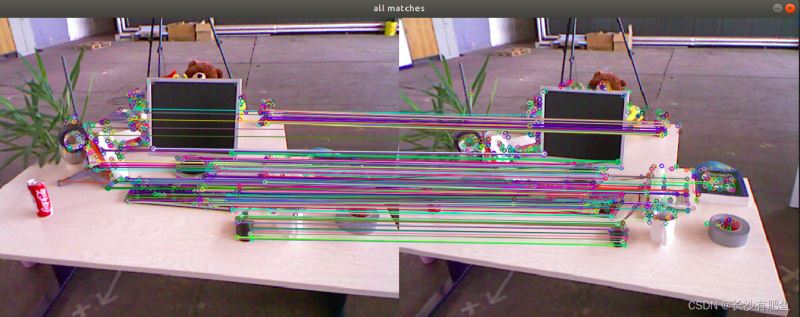

#include <iostream>#include <opencv2/core/core.hpp>#include <opencv2/features2d/features2d.hpp>#include <opencv2/highgui/highgui.hpp>#include <opencv2/calib3d/calib3d.hpp>#include <eigen/core>#include <eigen/dense>#include <eigen/geometry>#include <eigen/svd> #include <pangolin/pangolin.h>#include <chrono> using namespace std;using namespace cv; int picture_h=480;int picture_w=640; bool show_picture = true; void find_feature_matches( const mat &img_1, const mat &img_2, std::vector<keypoint> &keypoints_1, std::vector<keypoint> &keypoints_2, std::vector<dmatch> &matches); // 像素坐标转相机归一化坐标point2d pixel2cam(const point2d &p, const mat &k); void pose_estimation_3d3d( const vector<point3f> &pts1, const vector<point3f> &pts2, mat &r, mat &t); int main(int argc, char **argv) { if (argc != 5) { cout << "usage: pose_estimation_3d3d img1 img2 depth1 depth2" << endl; return 1; } //-- 读取图像 mat img_1 = imread(argv[1], cv_load_image_color); mat img_2 = imread(argv[2], cv_load_image_color); vector<keypoint> keypoints_1, keypoints_2; vector<dmatch> matches; find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches); cout << "picture1 keypoints: " << keypoints_1.size() << " \npicture2 keypoints: " << keypoints_2.size() << endl; cout << "一共找到了 " << matches.size() << " 组匹配点" << endl; // 建立3d点 mat depth1 = imread(argv[3], cv_8uc1); // 深度图为16位无符号数,单通道图像 mat depth2 = imread(argv[4], cv_8uc1); // 深度图为16位无符号数,单通道图像 mat k = (mat_<double>(3, 3) << 595.2, 0, 328.9, 0, 599.0, 253.9, 0, 0, 1); vector<point3f> pts1, pts2; for (dmatch m:matches) { int d1 = 255-(int)depth1.ptr<uchar>(int(keypoints_1[m.queryidx].pt.y))[int(keypoints_1[m.queryidx].pt.x)]; int d2 = 255-(int)depth2.ptr<uchar>(int(keypoints_2[m.trainidx].pt.y))[int(keypoints_2[m.trainidx].pt.x)]; if (d1 == 0 || d2 == 0) // bad depth continue; point2d p1 = pixel2cam(keypoints_1[m.queryidx].pt, k); point2d p2 = pixel2cam(keypoints_2[m.trainidx].pt, k); float dd1 = int(d1) / 1000.0; float dd2 = int(d2) / 1000.0; 
        pts1.push_back(Point3f(p1.x * dd1, p1.y * dd1, dd1));
        pts2.push_back(Point3f(p2.x * dd2, p2.y * dd2, dd2));
    }
    cout << "3d-3d pairs: " << pts1.size() << endl;

    Mat r, t;
    pose_estimation_3d3d(pts1, pts2, r, t);

    // dzq add: assemble the 4x4 homogeneous transform [r | t; 0 0 0 1]
    cv::Mat pose = (Mat_<double>(4, 4) <<
        r.at<double>(0, 0), r.at<double>(0, 1), r.at<double>(0, 2), t.at<double>(0),
        r.at<double>(1, 0), r.at<double>(1, 1), r.at<double>(1, 2), t.at<double>(1),
        r.at<double>(2, 0), r.at<double>(2, 1), r.at<double>(2, 2), t.at<double>(2),
        0, 0, 0, 1);

    cout << "[delete outliers] matched objects distance: ";
    vector<double> vdistance;
    double alldistance = 0; // sum of distances; its mean is the error scale used to flag outliers
    for (int i = 0; i < pts1.size(); i++) {
        Mat point = pose * (Mat_<double>(4, 1) << pts2[i].x, pts2[i].y, pts2[i].z, 1);
        double distance = pow(pow(pts1[i].x - point.at<double>(0), 2) +
                              pow(pts1[i].y - point.at<double>(1), 2) +
                              pow(pts1[i].z - point.at<double>(2), 2), 0.5);
        vdistance.push_back(distance);
        alldistance += distance;
        // cout << distance << " ";
    }
    // cout << endl;
    double avgdistance = alldistance / pts1.size(); // mean distance
    int n_outliers = 0;
    // i tracks the position in the vector after removal, j the original position
    for (int i = 0, j = 0; i < pts1.size(); i++, j++)
    {
        // A pair farther apart than n times the mean distance counts as an outlier
        // (this loop only counts them). [delete outliers] dzq fixed_param
        if (vdistance[i] > 1.5 * avgdistance)
        {
            n_outliers++;
        }
    }
    cout << "n_outliers:: " << n_outliers << endl;

    // show points
    {
        // Create a window
        pangolin::CreateWindowAndBind("show points", 640, 480);
        // Enable depth testing
        glEnable(GL_DEPTH_TEST);
        // Define Projection and initial ModelView matrix
        pangolin::OpenGlRenderState s_cam(
            pangolin::ProjectionMatrix(640, 480, 420, 420, 320, 240, 0.05, 500),
            // Same arguments as gluLookAt: camera position, reference point, up vector
            pangolin::ModelViewLookAt(0, -5, 0.1, 0, 0, 0, pangolin::AxisY));
        // Create Interactive View in window
        pangolin::Handler3D handler(s_cam);
        // SetBounds relates to the OpenGL viewport;
        // see how the bounds are set in Pangolin's SimpleDisplay example
        pangolin::View &d_cam = pangolin::CreateDisplay()
            .SetBounds(0.0, 1.0, 0.0, 1.0, -640.0f / 480.0f)
            .SetHandler(&handler);

        while (!pangolin::ShouldQuit()) {
            // Clear screen and activate view to render into
            glClearColor(0.97, 0.97, 1.0, 1); // background color
            glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
            d_cam.Activate(s_cam);

            // glLineWidth is not allowed between glBegin and glEnd, so it is set
            // beforehand; it does not change the size of GL_POINTS.
            glLineWidth(5);
            glBegin(GL_POINTS); // draw the matched points
            for (int i = 0; i < pts1.size(); i++) {
                glColor3f(1, 0, 0);
                glVertex3d(pts1[i].x, pts1[i].y, pts1[i].z);
                Mat point = pose * (Mat_<double>(4, 1) << pts2[i].x, pts2[i].y, pts2[i].z, 1);
                glColor3f(0, 1, 0);
                glVertex3d(point.at<double>(0), point.at<double>(1), point.at<double>(2));
            }
            glEnd();

            glLineWidth(1);
            glBegin(GL_LINES); // draw the lines joining matched points
            for (int i = 0; i < pts1.size(); i++) {
                glColor3f(0, 0, 1);
                glVertex3d(pts1[i].x, pts1[i].y, pts1[i].z);
                Mat point = pose * (Mat_<double>(4, 1) << pts2[i].x, pts2[i].y, pts2[i].z, 1);
                glVertex3d(point.at<double>(0), point.at<double>(1), point.at<double>(2));
            }
            glEnd();

            glLineWidth(5);
            glBegin(GL_POINTS); // draw all points of the first depth image
            glColor3f(1, 0.5, 0);
            for (int i = 0; i < picture_h; i += 2) {
                for (int j = 0; j < picture_w; j += 2) {
                    int d1 = 255 - (int)depth1.ptr<uchar>(i)[j];
                    if (d1 == 0) // bad depth
                        continue;
                    Point2d temp_p;
                    temp_p.y = i; // note the swap: row index i is y, column index j is x
                    temp_p.x = j;
                    Point2d p1 = pixel2cam(temp_p, k);
                    float dd1 = int(d1) / 1000.0;
                    glVertex3d(p1.x * dd1, p1.y * dd1, dd1);
                    // glVertex3d(j / 1000.0, i / 1000.0, d1 / 200.0);
                }
            }
            glEnd();

            // Swap frames and Process Events
            pangolin::FinishFrame();
        }
    }
}

void find_feature_matches(const Mat &img_1, const Mat &img_2,
                          std::vector<KeyPoint> &keypoints_1,
                          std::vector<KeyPoint> &keypoints_2,
                          std::vector<DMatch> &matches) {
    //-- Initialization
    Mat descriptors_1, descriptors_2;
    // used in OpenCV3
    Ptr<FeatureDetector> detector = ORB::create(2000, (1.200000048f), 8, 100);
    Ptr<DescriptorExtractor> descriptor = ORB::create(5000);
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

    //-- Step 1: detect Oriented FAST corner positions
    detector->detect(img_1, keypoints_1);
    detector->detect(img_2, keypoints_2);

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute(img_1, keypoints_1, descriptors_1);
    descriptor->compute(img_2, keypoints_2, descriptors_2);

    //-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance
    vector<DMatch> match;
    // BFMatcher matcher ( NORM_HAMMING );
    matcher->match(descriptors_1, descriptors_2, match);

    //-- Step 4: filter the matched pairs
    double min_dist = 10000, max_dist = 0;

    // Find the minimum and maximum distance over all matches, i.e. the distances
    // of the most similar and the least similar pairs
    for (int i = 0; i < descriptors_1.rows; i++) {
        double dist = match[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }

    printf("-- max dist : %f \n", max_dist);
    printf("-- min dist : %f \n", min_dist);

    // A match is treated as wrong when its descriptor distance exceeds twice the
    // minimum distance. The minimum can be very small, so an empirical value of 30
    // is used as a lower bound on the threshold.
    for (int i = 0; i < descriptors_1.rows; i++) {
        if (match[i].distance <= max(2 * min_dist, 30.0)) {
            matches.push_back(match[i]);
        }
    }

    //-- Step 5: draw the matching result
    if (show_picture) {
        Mat img_match;
        Mat img_goodmatch;
        drawMatches(img_1, keypoints_1, img_2, keypoints_2, matches, img_match);
        imshow("all matches", img_match);
        waitKey(0);
    }
}

Point2d pixel2cam(const Point2d &p, const Mat &k) {
    return Point2d(
        (p.x - k.at<double>(0, 2)) / k.at<double>(0, 0),
        (p.y - k.at<double>(1, 2)) / k.at<double>(1, 1)
    );
}

void pose_estimation_3d3d(const vector<Point3f> &pts1,
                          const vector<Point3f> &pts2,
                          Mat &r, Mat &t) {
    Point3f p1, p2; // center of mass
    int n = pts1.size();
    for (int i = 0; i < n; i++) {
        p1 += pts1[i];
        p2 += pts2[i];
    }
    p1 = Point3f(Vec3f(p1) / n);
    p2 = Point3f(Vec3f(p2) / n);
    vector<Point3f> q1(n), q2(n); // remove the center
    for (int i = 0; i < n; i++) {
        q1[i] = pts1[i] - p1;
        q2[i] = pts2[i] - p2;
    }

    // compute q1*q2^T
    Eigen::Matrix3d w = Eigen::Matrix3d::Zero();
    for (int i = 0; i < n; i++) {
        w += Eigen::Vector3d(q1[i].x, q1[i].y, q1[i].z) *
             Eigen::Vector3d(q2[i].x, q2[i].y, q2[i].z).transpose();
    }
    // cout << "w=" << w << endl;

    // SVD on w
    Eigen::JacobiSVD<Eigen::Matrix3d> svd(w, Eigen::ComputeFullU | Eigen::ComputeFullV);
    Eigen::Matrix3d u = svd.matrixU();
    Eigen::Matrix3d v = svd.matrixV();

    Eigen::Matrix3d r_ = u * (v.transpose());
    if (r_.determinant() < 0) {
        r_ = -r_;
    }
    Eigen::Vector3d t_ = Eigen::Vector3d(p1.x, p1.y, p1.z) - r_ * Eigen::Vector3d(p2.x, p2.y, p2.z);

    // convert to cv::Mat
    r = (Mat_<double>(3, 3) <<
        r_(0, 0), r_(0, 1), r_(0, 2),
        r_(1, 0), r_(1, 1), r_(1, 2),
        r_(2, 0), r_(2, 1), r_(2, 2)
    );
    t = (Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));
}

void convertrgb2gray(string picture)
{
    double min;
    double max;
    // Original note: the depth map is a 16-bit unsigned, single-channel image.
    // With its default flag, imread loads the file as an 8-bit, 3-channel BGR image.
    Mat depth_new_1 = imread(picture);
    // Build a 256-entry BGR color lookup table
    // (dark blue -> cyan -> yellow -> red -> dark red), repeated on each of the 20 rows
    Mat test = Mat(20, 256, CV_8UC3);
    int s;
    for (int i = 0; i < 20; i++) {
        std::cout << i << " ";
        Vec3b *p = test.ptr<Vec3b>(i);
        for (s = 0; s < 32; s++) {
            p[s][0] = 128 + 4 * s;
            p[s][1] = 0;
            p[s][2] = 0;
        }
        p[32][0] = 255; p[32][1] = 0; p[32][2] = 0;
        for (s = 0; s < 63; s++) {
            p[33 + s][0] = 255;
            p[33 + s][1] = 4 + 4 * s;
            p[33 + s][2] = 0;
        }
        p[96][0] = 254; p[96][1] = 255; p[96][2] = 2;
        for (s = 0; s < 62; s++) {
            p[97 + s][0] = 250 - 4 * s;
            p[97 + s][1] = 255;
            p[97 + s][2] = 6 + 4 * s;
        }
        p[159][0] = 1; p[159][1] = 255; p[159][2] = 254;
        for (s = 0; s < 64; s++) {
            p[160 + s][0] = 0;
            p[160 + s][1] = 252 - (s * 4);
            p[160 + s][2] = 255;
        }
        for (s = 0; s < 32; s++) {
            p[224 + s][0] = 0;
            p[224 + s][1] = 0;
            p[224 + s][2] = 252 - 4 * s;
        }
    }
    cout << "depth_new_1 :: " << depth_new_1.cols << " " << depth_new_1.rows << " " << endl;

    // For every pixel, find the table index whose color differs by less than 4 in
    // each channel and store that index as the gray value (the last matching index wins)
    Mat img_g = Mat(picture_h, picture_w, CV_8UC1);
    for (int i = 0; i < picture_h; i++) {
        Vec3b *p = test.ptr<Vec3b>(0);
        Vec3b *q = depth_new_1.ptr<Vec3b>(i);
        for (int j = 0; j < picture_w; j++) {
            for (int k = 0; k < 256; k++) {
                if ((((int)p[k][0] - (int)q[j][0] < 4) && ((int)q[j][0] - (int)p[k][0] < 4)) &&
                    (((int)p[k][1] - (int)q[j][1] < 4) && ((int)q[j][1] - (int)p[k][1] < 4)) &&
                    (((int)p[k][2] - (int)q[j][2] < 4) && ((int)q[j][2] - (int)p[k][2] < 4)))
                {
                    img_g.at<uchar>(i, j) = k;
                }
            }
        }
    }
    imwrite("14_depth_3.png", img_g);
    waitKey();
}
|
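The outlier loop in `main` only counts the pairs whose residual exceeds 1.5 times the mean distance; it does not remove them. Below is a minimal sketch of how the same criterion could return the indices of the surviving pairs. It is written in plain C++ without OpenCV, and `Point3`, `distance3`, and `inlier_indices` are hypothetical names introduced for illustration, not part of the original program.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal 3D point type, standing in for cv::Point3f.
struct Point3 {
    double x, y, z;
};

// Euclidean distance between two 3D points.
double distance3(const Point3 &a, const Point3 &b) {
    return std::sqrt((a.x - b.x) * (a.x - b.x) +
                     (a.y - b.y) * (a.y - b.y) +
                     (a.z - b.z) * (a.z - b.z));
}

// Returns the indices of the pairs whose residual is at most `ratio` times the
// mean residual. aligned2[i] is assumed to be the second point of pair i after
// it has been transformed into the frame of pts1[i] (pose * pts2[i] in main).
std::vector<std::size_t> inlier_indices(const std::vector<Point3> &pts1,
                                        const std::vector<Point3> &aligned2,
                                        double ratio = 1.5) {
    assert(pts1.size() == aligned2.size());
    std::vector<std::size_t> inliers;
    if (pts1.empty()) return inliers;

    // First pass: residual of every pair and their sum.
    std::vector<double> residuals;
    residuals.reserve(pts1.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < pts1.size(); ++i) {
        residuals.push_back(distance3(pts1[i], aligned2[i]));
        sum += residuals.back();
    }
    const double mean = sum / static_cast<double>(pts1.size());

    // Second pass: keep the pairs that are not flagged as outliers.
    for (std::size_t i = 0; i < residuals.size(); ++i) {
        if (residuals[i] <= ratio * mean) inliers.push_back(i);
    }
    return inliers;
}
```

One possible use, again as an assumption rather than something the original program does, is to rebuild `pts1` and `pts2` from the returned indices and call `pose_estimation_3d3d` a second time on the inliers only.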

CMakeLists.txt

Same as above.

| ./icp 1.png 2.png 1_depth.png 2_depth.png |

| -- max dist : 87.000000
-- min dist : 4.000000
picture1 keypoints: 1304
picture2 keypoints: 1301
一共找到了 313 组匹配点
3d-3d pairs: 313
[delete outliers] matched objects distance: n_outliers:: 23 |
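In this run the minimum Hamming distance is 4, so the threshold used in `find_feature_matches` is max(2 × 4, 30) = 30: the empirical floor applies, not twice the minimum. The rule can be sketched in plain C++ on raw distances as follows; `filter_by_min_distance` and `floor_dist` are hypothetical names used only for illustration, and the real code works on `DMatch` objects.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Keeps the distances that pass the rule used in find_feature_matches:
//   distance <= max(2 * min_dist, floor_dist)
// Hypothetical helper that works on raw distances instead of cv::DMatch.
std::vector<double> filter_by_min_distance(const std::vector<double> &distances,
                                           double floor_dist = 30.0) {
    assert(floor_dist >= 0.0);
    std::vector<double> kept;
    if (distances.empty()) return kept;

    // Smallest distance over all candidate matches.
    const double min_dist = *std::min_element(distances.begin(), distances.end());
    // The floor stops a very small minimum from rejecting almost everything.
    const double threshold = std::max(2.0 * min_dist, floor_dist);

    for (double d : distances) {
        if (d <= threshold) kept.push_back(d);
    }
    return kept;
}
```

With a minimum of 20 instead, the threshold would be 40, so the doubling rule only takes over once the best match is worse than 15.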

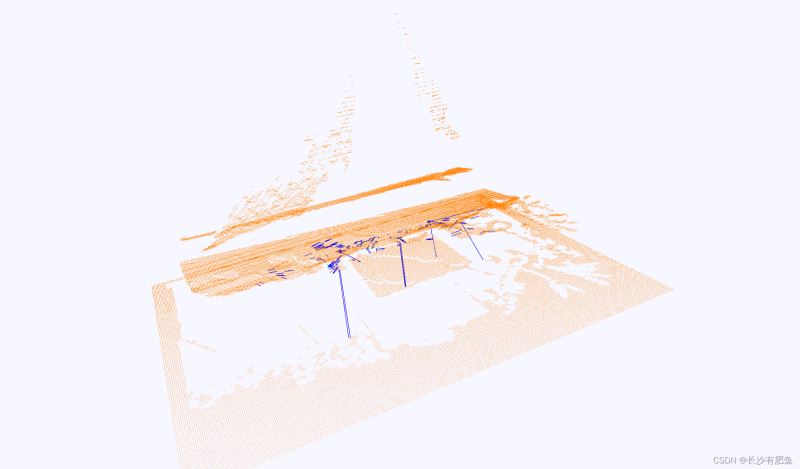

Execution result

That concludes this brief look at the ORB, SURF, and SIFT feature extraction methods and the ICP matching method.

Original article: https://blog.csdn.net/weixin_53660567/article/details/122096075