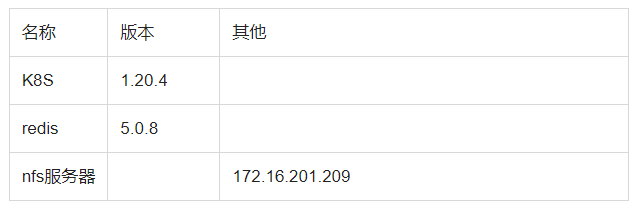

Environment:

Background: Redis files that must survive pod restarts are persisted on an NFS-backed storage volume.

I. Deploy the NFS server

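    # Install the NFS service on the server so that it can provide NFS storage

    # 1. Install nfs-utils
    yum install nfs-utils                # CentOS
    # or
    apt-get install nfs-kernel-server    # Ubuntu

    # 2. Start the service
    systemctl enable nfs-server
    systemctl start nfs-server

    # 3. Create the shared directory
    mkdir /home/nfs

    # 4. Edit the export configuration
    vim /etc/exports
    # Syntax: <shared path> <client address>(<options>)
    # The client address can be an IP, a subnet, a domain name, or * for any host
    /home/nfs *(rw,async,no_root_squash)

    # Re-export everything and verify the configuration
    exportfs -arv

    # 5. Restart the service
    systemctl restart nfs-server

    # 6. List the exported directories from the server itself
    # Usage: showmount -e <server IP> (showmount ships with nfs-utils; install that package if the command is missing)
    showmount -e 127.0.0.1
    /home/nfs *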
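The export syntax from step 4 (path, client address, options in parentheses) can be illustrated with a small helper that assembles one entry from its three parts. This is only a sketch: the function name `exports_entry` is hypothetical, and the subnet `172.16.201.0/24` and the `sync` option in the second call are assumptions chosen for illustration, not values from the original setup.

```shell
#!/bin/sh
# Hypothetical helper: build one /etc/exports entry as "<path> <client>(<options>)".
exports_entry() {
    printf '%s %s(%s)\n' "$1" "$2" "$3"
}

# The entry used in this article: any client, read-write, async, root not squashed.
exports_entry /home/nfs '*' rw,async,no_root_squash

# Assumed tighter variant: only clients from one subnet (the subnet is a guess).
exports_entry /home/nfs 172.16.201.0/24 rw,sync,no_root_squash
```

A printed line can be appended to /etc/exports, after which `exportfs -arv` re-exports the configuration as in the steps above.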

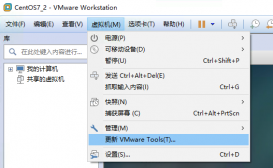

    # 7. Test-mount from a client (every k8s node needs the NFS client installed)
    [root@master-1 ~]# yum install nfs-utils          # CentOS
    # or: apt-get install nfs-common                  # Ubuntu
    [root@master-1 ~]# mkdir /test
    [root@master-1 ~]# mount -t nfs 172.16.201.209:/home/nfs /test
    # Unmount
    [root@master-1 ~]# umount /test

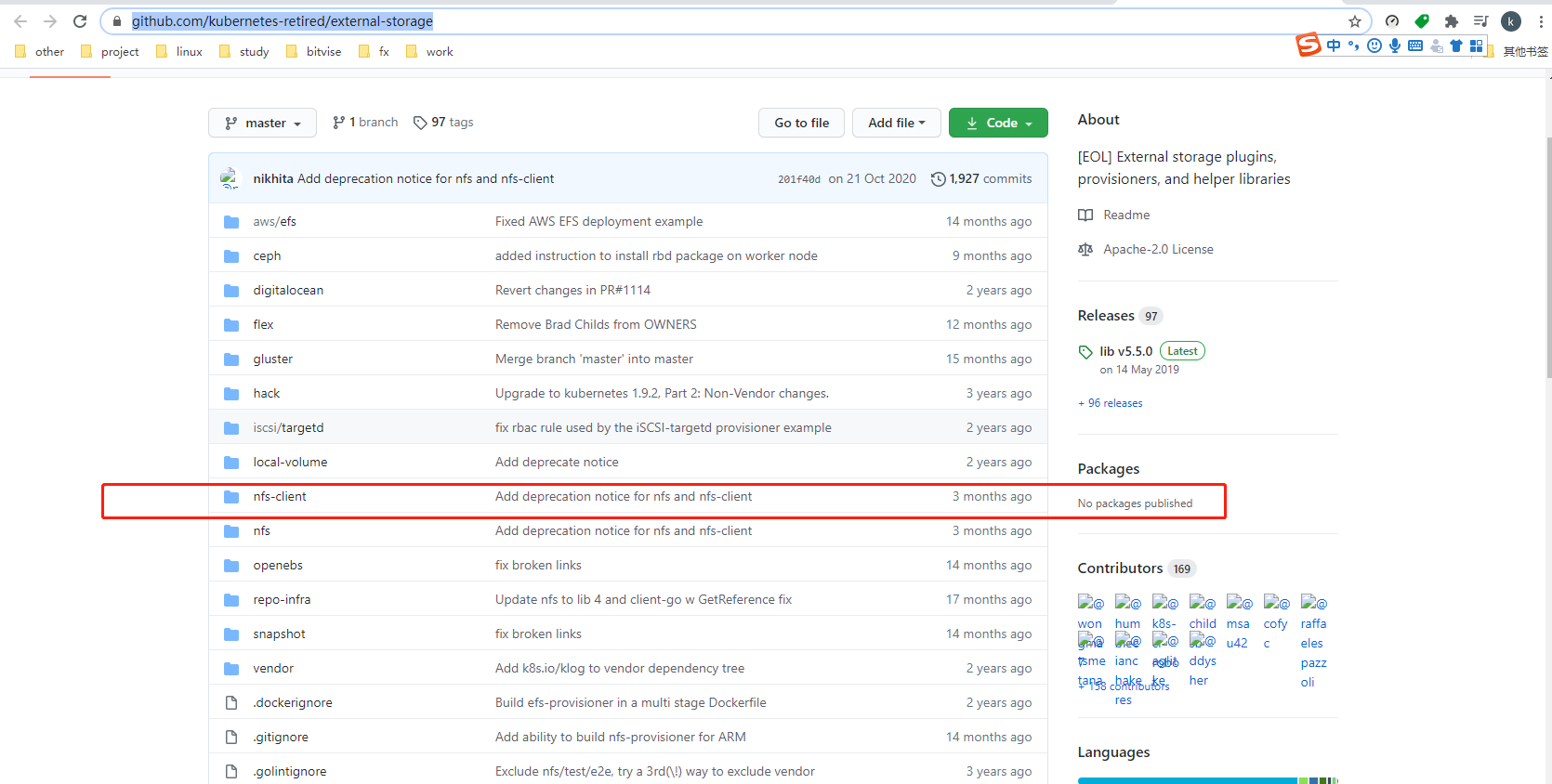

II. Configure dynamic PV provisioning (NFS StorageClass) and create the PVC

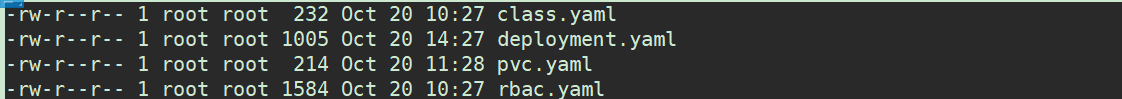

Deploy the NFS plugin that creates PVs automatically. Four YAML files are involved in total; the upstream documentation describes them in detail.

https://github.com/kubernetes-incubator/external-storage

    root@k8s-master1:~# mkdir /root/pvc
    root@k8s-master1:~# cd /root/pvc

Create rbac.yaml

    root@k8s-master1:pvc# cat rbac.yaml

    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

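Create deployment.yaml

The official default image may not be downloadable from mainland China; image: fxkjnj/nfs-client-provisioner:latest can be used instead.

Set the NFS server address and the shared directory (NFS_SERVER, NFS_PATH and the nfs volume) to match your environment.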

    root@k8s-master1:pvc# cat deployment.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: fxkjnj/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 172.16.201.209
                - name: NFS_PATH
                  value: /home/nfs
          volumes:
            - name: nfs-client-root
              nfs:
                server: 172.16.201.209
                path: /home/nfs

Create class.yaml

archiveOnDelete: "true" means that when the PVC is deleted, the backend data is archived instead of being deleted outright.

    root@k8s-master1:pvc# cat class.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "true"

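Create pvc.yaml

storageClassName specifies the name of the StorageClass to use.

requests: storage: 100Gi specifies how much storage is requested.

Note that this PVC is created in the redis namespace. If that namespace does not exist yet, create it first: kubectl create namespace redis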

    root@k8s-master1:pvc# cat pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-redis
      namespace: redis
    spec:
      storageClassName: "managed-nfs-storage"
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 100Gi

    # Deploy
    root@k8s-master1:pvc# kubectl apply -f .

    # Check the StorageClass
    root@k8s-master1:pvc# kubectl get sc
    NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  25h

    # Check the PVC
    root@k8s-master1:pvc# kubectl get pvc -n redis
    NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    nfs-redis   Bound    pvc-8eacbe25-3875-4f78-91ca-ba83b6967a8a   100Gi      RWX            managed-nfs-storage   21h

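III. Write the Redis YAML files

    root@k8s-master1:~# mkdir /root/redis
    root@k8s-master1:~# cd /root/redis

Write the redis.conf configuration file and mount it into the container as a ConfigMap.

requirepass sets the Redis password.

save 5 1 means that if at least one key changes within 5 seconds, a snapshot is written to dump.rdb.

appendonly no disables AOF, so on the next start Redis restores its data from the dump.rdb snapshot.

Note that the namespace is redis.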

    root@k8s-master1:redis# cat redis-configmap-rdb.yml

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: redis-config
      namespace: redis
      labels:
        app: redis
    data:
      redis.conf: |-
        protected-mode no
        port 6379
        tcp-backlog 511
        timeout 0
        tcp-keepalive 300
        daemonize no
        supervised no
        pidfile /data/redis_6379.pid
        loglevel notice
        logfile ""
        databases 16
        always-show-logo yes
        save 5 1
        save 300 10
        save 60 10000
        stop-writes-on-bgsave-error yes
        rdbcompression yes
        rdbchecksum yes
        dbfilename dump.rdb
        dir /data
        replica-serve-stale-data yes
        replica-read-only yes
        repl-diskless-sync no
        repl-diskless-sync-delay 5
        repl-disable-tcp-nodelay no
        replica-priority 100
        requirepass 123
        lazyfree-lazy-eviction no
        lazyfree-lazy-expire no
        lazyfree-lazy-server-del no
        replica-lazy-flush no
        appendonly no
        appendfilename "appendonly.aof"
        appendfsync everysec
        no-appendfsync-on-rewrite no
        auto-aof-rewrite-percentage 100
        auto-aof-rewrite-min-size 64mb
        aof-load-truncated yes
        aof-use-rdb-preamble yes
        lua-time-limit 5000
        slowlog-log-slower-than 10000
        slowlog-max-len 128
        latency-monitor-threshold 0
        notify-keyspace-events ""
        hash-max-ziplist-entries 512
        hash-max-ziplist-value 64
        list-max-ziplist-size -2
        list-compress-depth 0
        set-max-intset-entries 512
        zset-max-ziplist-entries 128
        zset-max-ziplist-value 64
        hll-sparse-max-bytes 3000
        stream-node-max-bytes 4096
        stream-node-max-entries 100
        activerehashing yes
        client-output-buffer-limit normal 0 0 0
        client-output-buffer-limit replica 256mb 64mb 60
        client-output-buffer-limit pubsub 32mb 8mb 60
        hz 10
        dynamic-hz yes
        aof-rewrite-incremental-fsync yes
        rdb-save-incremental-fsync yes
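To make the snapshot rules in the configuration easier to read, the sketch below prints every `save <seconds> <changes>` line of a redis.conf in plain words. The function name `describe_save_rules` and the scratch file are illustrative assumptions; the three rules in the demo are the ones from the ConfigMap above.

```shell
#!/bin/sh
# Print each "save <seconds> <changes>" rule of a redis.conf in plain words.
describe_save_rules() {
    awk '$1 == "save" && NF == 3 {
        printf "snapshot if >= %s key change(s) within %s second(s)\n", $3, $2
    }' "$1"
}

# Demo on a scratch file that holds the three rules from the ConfigMap.
conf=$(mktemp)
printf 'save 5 1\nsave 300 10\nsave 60 10000\nappendonly no\n' > "$conf"
describe_save_rules "$conf"
rm -f "$conf"
```

Redis evaluates the rules independently, so a snapshot is triggered as soon as any one of them is satisfied.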

Write redis-deployment.yml

Note that the namespace is redis.

    root@k8s-master1:redis# cat redis-deployment.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis
      namespace: redis
      labels:
        app: redis
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: redis
      template:
        metadata:
          labels:
            app: redis
        spec:
          # Init step: adjust kernel settings to silence the warnings Redis prints at startup
          initContainers:
            - name: system-init
              image: busybox:1.32
              imagePullPolicy: IfNotPresent
              command:
                - "sh"
                - "-c"
                - "echo 2048 > /proc/sys/net/core/somaxconn && echo never > /sys/kernel/mm/transparent_hugepage/enabled"
              securityContext:
                privileged: true
                runAsUser: 0
              volumeMounts:
                - name: sys
                  mountPath: /sys
          containers:
            - name: redis
              image: redis:5.0.8
              command:
                - "sh"
                - "-c"
                - "redis-server /usr/local/etc/redis/redis.conf"
              ports:
                - containerPort: 6379
              resources:
                limits:
                  cpu: 1000m
                  memory: 1024Mi
                requests:
                  cpu: 1000m
                  memory: 1024Mi
              livenessProbe:
                tcpSocket:
                  port: 6379
                initialDelaySeconds: 300
                timeoutSeconds: 1
                periodSeconds: 10
                successThreshold: 1
                failureThreshold: 3
              readinessProbe:
                tcpSocket:
                  port: 6379
                initialDelaySeconds: 5
                timeoutSeconds: 1
                periodSeconds: 10
                successThreshold: 1
                failureThreshold: 3
              volumeMounts:
                - name: data
                  mountPath: /data
                - name: config
                  mountPath: /usr/local/etc/redis/redis.conf
                  subPath: redis.conf
          volumes:
            - name: data
              persistentVolumeClaim:
                claimName: nfs-redis
            - name: config
              configMap:
                name: redis-config
            - name: sys
              hostPath:
                path: /sys

Write redis-service.yml

Note that the namespace is redis.
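The listing for redis-service.yml does not appear in this article. Judging from the `kubectl get svc` output in this section (a NodePort Service named redis-front that maps 6379 to 36379) and the `app: redis` label in the Deployment, it could have looked roughly like the sketch below. This manifest is a reconstruction under those assumptions, not the author's file; the port name and the `targetPort` value are guesses. Note that 36379 lies outside the default NodePort range of 30000-32767, so the cluster's `--service-node-port-range` must have been widened to include it.

```shell
#!/bin/sh
# Write a reconstructed (assumed) Service manifest to redis-service.yml.
cat > redis-service.yml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: redis-front      # name taken from the kubectl get svc output
  namespace: redis
  labels:
    app: redis
spec:
  type: NodePort
  selector:
    app: redis           # matches the pod label in redis-deployment.yml
  ports:
    - name: redis        # assumed port name
      port: 6379
      targetPort: 6379   # assumed; the container listens on 6379
      nodePort: 36379    # needs a node port range that includes 36379
EOF

# Apply it together with the ConfigMap and the Deployment (requires cluster access):
#   kubectl apply -f redis-service.yml
```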

    # Deploy
    root@k8s-master1:~/kubernetes/redis# kubectl get pod -n redis
    NAME                     READY   STATUS    RESTARTS   AGE
    redis-65f75db6bc-5skgr   1/1     Running   0          21h
    redis-65f75db6bc-75m8m   1/1     Running   0          21h
    redis-65f75db6bc-cp6cx   1/1     Running   0          21h

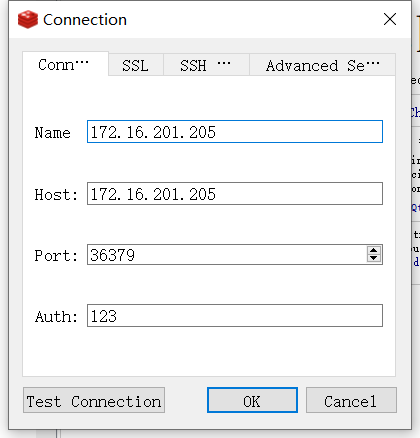

    root@k8s-master1:~/kubernetes/redis# kubectl get svc -n redis
    NAME          TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
    redis-front   NodePort   10.0.0.169   <none>        6379:36379/TCP   22h

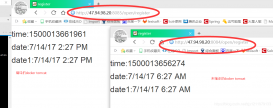

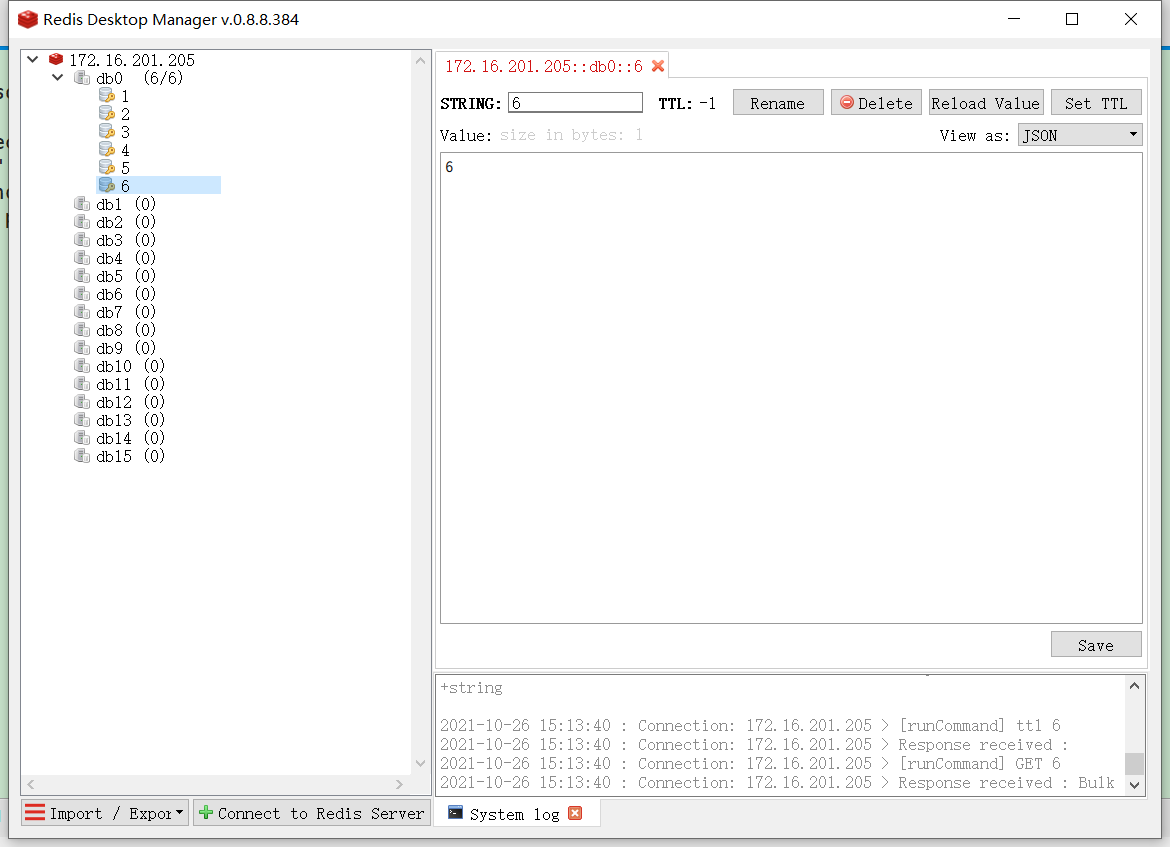

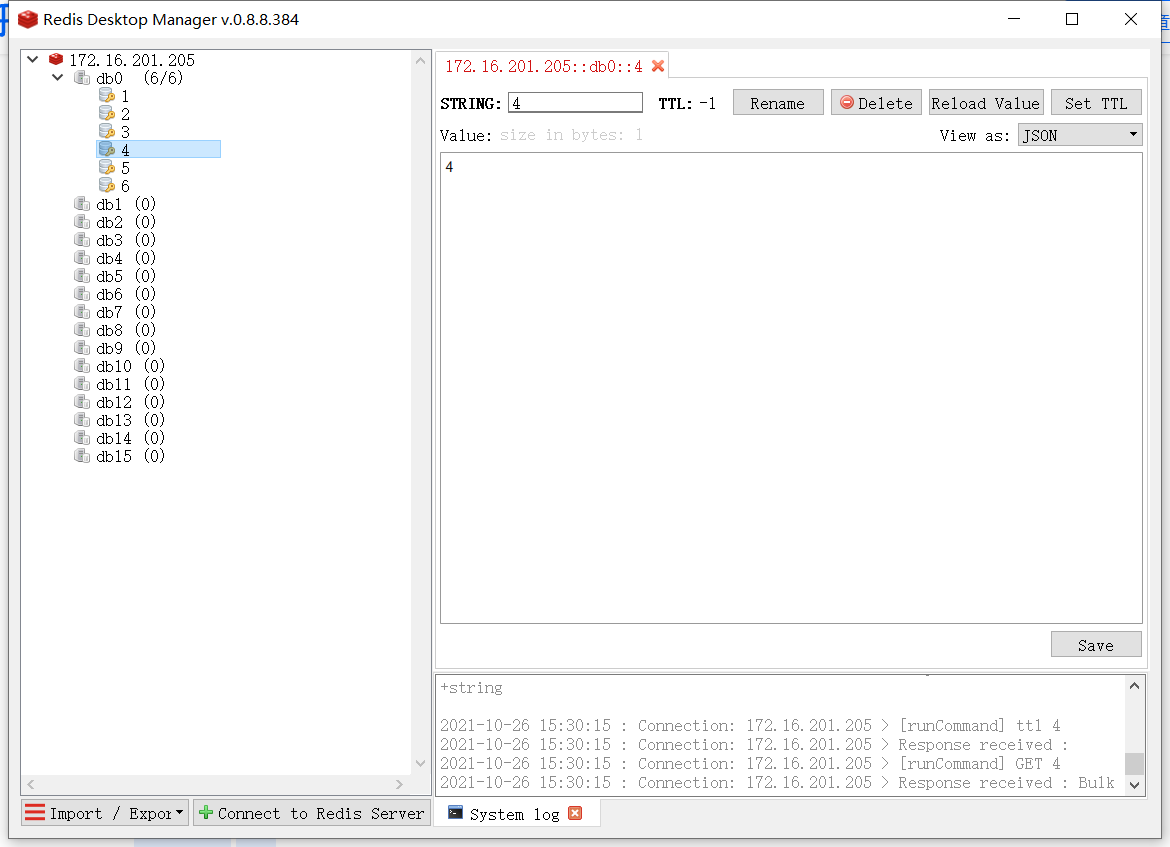

四、测试,访问

使用redis 客户端工具,写入几个KEY 测试

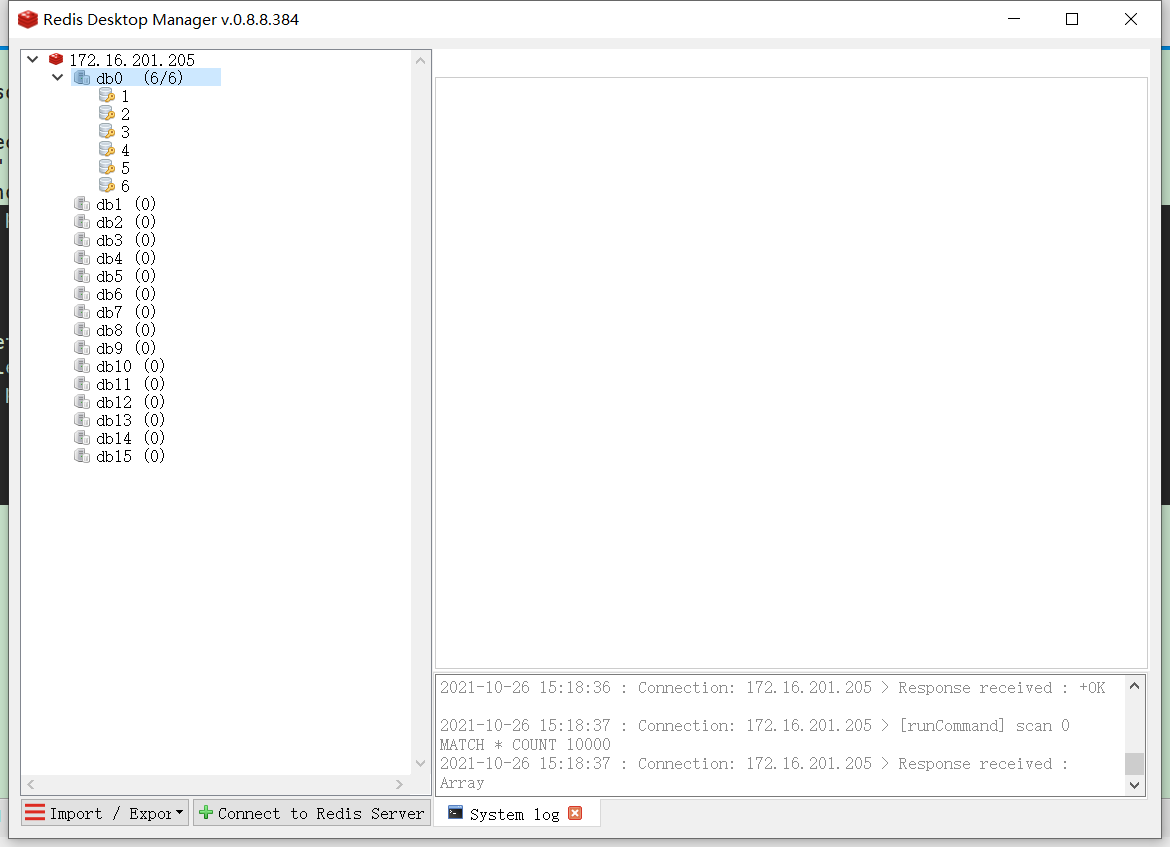

删除pod,在自动新建pod后,查询键值是否存在

    root@k8s-master1:~# kubectl get pods -n redis
    NAME                     READY   STATUS    RESTARTS   AGE
    redis-65f75db6bc-5skgr   1/1     Running   0          5d20h
    redis-65f75db6bc-75m8m   1/1     Running   0          5d20h
    redis-65f75db6bc-cp6cx   1/1     Running   0          5d20h

    root@k8s-master1:~# kubectl delete -n redis pod redis-65f75db6bc-5skgr
    pod "redis-65f75db6bc-5skgr" deleted

    # After the pod is deleted, a new pod is created to restore the replica count
    root@k8s-master1:~# kubectl get pods -n redis
    NAME                     READY   STATUS    RESTARTS   AGE
    redis-65f75db6bc-tnnxp   1/1     Running   0          54s
    redis-65f75db6bc-75m8m   1/1     Running   0          5d20h
    redis-65f75db6bc-cp6cx   1/1     Running   0          5d20h

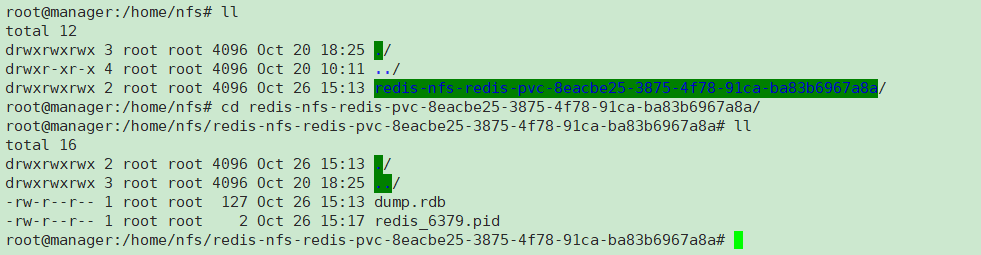

Check whether dump.rdb exists in the NFS shared directory.
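One way to do this check on the NFS server is sketched below. The provisioner creates one subdirectory per PVC under the export (the restore step in section V uses a directory named redis-nfs-redis-pvc-...), so the search walks the whole tree instead of assuming a fixed path. The function name `find_rdb` is hypothetical.

```shell
#!/bin/sh
# List every dump.rdb below an export root, with size and modification time.
find_rdb() {
    find "$1" -type f -name dump.rdb -exec ls -l {} +
}

# /home/nfs is the export used in this article; skip quietly when run elsewhere.
if [ -d /home/nfs ]; then
    find_rdb /home/nfs
fi
```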

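V. Failure drill and recovery

(1) Data backup

If the source Redis has persistence configured, go to the persistence directory and copy dump.rdb from there.

If the source Redis has no persistence configured, enter the container, generate dump.rdb, and copy it out:

Enter the container: kubectl exec -it redis-xxx -n redis -- /bin/bash

Open the Redis CLI: redis-cli

Authenticate with the password: auth 123

Save the data, which generates dump.rdb: save

Leave the Redis CLI: quit

Leave the container: exit

Copy the file from the container to the local machine: kubectl cp -n redis Pod_Name:/data/dump.rdb ./

Transfer it to a remote host: scp dump.rdb root@<target server>:/<directory>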
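The interactive backup steps above can also be run without opening a shell in the container. The following is a minimal sketch, assuming the redis namespace, the /data directory and the password 123 from this article. The function name `backup_rdb` and the `KUBECTL` override are hypothetical; setting `KUBECTL=echo` only prints the commands, which is useful for a dry run.

```shell
#!/bin/sh
# Non-interactive version of the backup steps. KUBECTL can be overridden
# (for example KUBECTL=echo) to print the commands instead of running them.
backup_rdb() {
    pod="$1"
    dest="${2:-.}"
    kubectl_cmd="${KUBECTL:-kubectl}"
    # Force a synchronous snapshot; 123 is the requirepass value from redis.conf.
    "$kubectl_cmd" exec -n redis "$pod" -- redis-cli -a 123 save || return 1
    # Copy the snapshot out of the pod.
    "$kubectl_cmd" cp -n redis "$pod:/data/dump.rdb" "$dest/dump.rdb"
}

# Dry run in a subshell: print what would be executed for a placeholder pod name.
( KUBECTL=echo; backup_rdb redis-xxx /tmp )
```

Passing the password with `-a` makes it visible in the process list, so this form is better suited to a test cluster than to production.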

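(2) Data recovery

- Stop Redis by deleting the Deployment that was created.

- Copy dump.rdb into the persistence directory of the target Redis (note: this overwrites the target Redis data).

- Restart the pods: kubectl apply -f redis-deployment.yml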

    # Copy dump.rdb from the persistence directory to /root
    cp dump.rdb /root

    # Stop Redis, that is, delete the Deployment
    root@k8s-master1:~/kubernetes/redis# kubectl delete -f redis-deployment.yml
    deployment.apps "redis" deleted
    root@k8s-master1:~/kubernetes/redis# kubectl get pods -n redis
    No resources found in redis namespace.

    # Copy dump.rdb into the persistence directory of the target Redis
    cp /root/dump.rdb /home/nfs/redis-nfs-redis-pvc-8eacbe25-3875-4f78-91ca-ba83b6967a8a

    # Restart the pods
    root@k8s-master1:~/kubernetes/redis# kubectl apply -f redis-deployment.yml
    deployment.apps/redis created
    root@k8s-master1:~/kubernetes/redis# kubectl get pods -n redis
    NAME                     READY   STATUS     RESTARTS   AGE
    redis-65f75db6bc-5jx4m   0/1     Init:0/1   0          3s
    redis-65f75db6bc-68jf5   0/1     Init:0/1   0          3s
    redis-65f75db6bc-b9gvk   0/1     Init:0/1   0          3s
    root@k8s-master1:~/kubernetes/redis# kubectl get pods -n redis
    NAME                     READY   STATUS    RESTARTS   AGE
    redis-65f75db6bc-5jx4m   1/1     Running   0          20s
    redis-65f75db6bc-68jf5   1/1     Running   0          20s
    redis-65f75db6bc-b9gvk   1/1     Running   0          20s
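Because the copy step overwrites the target's dump.rdb, it may be worth keeping the file that is being replaced and confirming that the copy succeeded. The sketch below is one possible way to do that on the NFS server; the function name `restore_rdb` and the `.bak` suffix are assumptions, not part of the original procedure.

```shell
#!/bin/sh
# Copy a backup dump.rdb into a PV directory, keeping the file it replaces.
restore_rdb() {
    src="$1"
    pv_dir="$2"
    [ -f "$src" ] || { echo "missing backup: $src" >&2; return 1; }
    [ -d "$pv_dir" ] || { echo "missing PV directory: $pv_dir" >&2; return 1; }
    # Keep the current snapshot, since the restore overwrites it.
    if [ -f "$pv_dir/dump.rdb" ]; then
        cp -p "$pv_dir/dump.rdb" "$pv_dir/dump.rdb.bak" || return 1
    fi
    cp "$src" "$pv_dir/dump.rdb" || return 1
    # Confirm the copy is byte-identical to the backup.
    cmp -s "$src" "$pv_dir/dump.rdb"
}

# Example call with the paths from this article (run it while Redis is stopped):
#   restore_rdb /root/dump.rdb /home/nfs/redis-nfs-redis-pvc-8eacbe25-3875-4f78-91ca-ba83b6967a8a
```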

(3) Verify the data: all data from the source Redis has been restored.

Original link: https://www.toutiao.com/a7023273935886205476/